Speed up your machine learning with Google Cloud + TensorFlow + GPU

A step-by-step guide to setting up the TensorFlow GPU version on Google Compute Engine and training your models in the cloud

Hello everyone! I hope you are having a great day. It can quickly turn into a slow one if your old computer takes forever to train your machine learning model.

If your dataset is small, you might finish the job within a day, but with larger datasets training can stretch to several days or even weeks. I faced the same issue when working on one of my projects. It gets worse if you train several models with different parameters, since the total run time grows linearly with the number of models.

You can reduce the run time greatly by using a GPU, but not every GPU supports this. If you have an NVIDIA GPU with CUDA support, there is a good chance you can use it for the task, although general-purpose gaming GPUs are less suited to it than high-end GPUs built specifically for large-scale computation. To use the TensorFlow GPU version, you need a 64-bit rig and a GPU with CUDA Compute Capability 3.5 or higher.
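To make the Compute Capability requirement concrete, here is a minimal Python sketch that checks a GPU's capability string against a minimum. It assumes the 3.5 floor that TensorFlow's prebuilt 1.x GPU packages required (check the TensorFlow documentation for your version); `parse_capability` and `meets_min_capability` are hypothetical helper names, and the GPUs listed are only examples.

```python
from typing import Tuple

# Assumed minimum for TensorFlow's prebuilt 1.x GPU packages.
MIN_CAPABILITY = (3, 5)


def parse_capability(cc: str) -> Tuple[int, int]:
    """Turn a string such as "3.7" into a comparable (major, minor) tuple."""
    major, minor = cc.strip().split(".")
    return int(major), int(minor)


def meets_min_capability(cc: str, minimum: Tuple[int, int] = MIN_CAPABILITY) -> bool:
    """Hypothetical helper: True if the GPU's capability is at least `minimum`."""
    return parse_capability(cc) >= minimum


if __name__ == "__main__":
    # Example GPUs: the Tesla K80 used in this guide is 3.7, a GTX 680 is 3.0.
    for gpu, cc in [("Tesla K80", "3.7"), ("GeForce GTX 680", "3.0"), ("Tesla T4", "7.5")]:
        print(gpu, cc, "OK" if meets_min_capability(cc) else "too old")
```

Comparing (major, minor) integer tuples rather than floats keeps the check exact.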

There is no point in spending a fortune on a high-end rig with a high-end GPU if you only need it to train machine learning models for a short period. In this article, I explain how to “borrow” a high-end GPU from Google Cloud Platform (not free, but cheap), set it up to run the TensorFlow GPU version, and get your model trained. Buckle up!

Prerequisites

- Google Cloud Platform account

- Basic knowledge of Linux terminal commands

- Patience

Setting up a VPS on Google Cloud

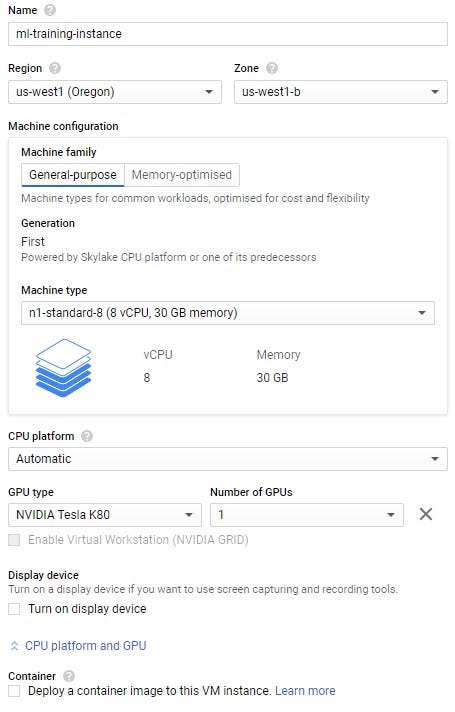

This is pretty straightforward. Sign in to your Google Cloud account, navigate to the Compute Engine dashboard, and click Create Instance. The minimum specs are as follows. Note that GCP caps the number of vCPUs on an instance that uses a GPU; with a single K80, the maximum is 8.

I am creating an “n1-standard-8” instance with 8 vCPUs, 30GB of RAM, and an NVIDIA Tesla K80. This bad boy is more than enough for your day-to-day needs. Make sure you select Ubuntu 18.04 LTS as the operating system and increase the boot disk size to 30GB. Then tick the Firewall options (Allow HTTP traffic and Allow HTTPS traffic) at the bottom. At the time of writing, this instance costs $0.583 per hour. Pretty cheap, right? Don't worry: you only pay for what you use.
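Because you pay by the hour, a quick back-of-the-envelope estimate helps before you launch long jobs. The sketch below uses the $0.583/hour figure quoted above as a placeholder (prices change). It shows that the bill grows linearly when you train several models with different parameters. `estimate_cost` is a hypothetical helper.

```python
# n1-standard-8 + 1x Tesla K80 at the time of writing; check current pricing.
HOURLY_RATE_USD = 0.583


def estimate_cost(hours_per_model: float, n_models: int = 1,
                  rate: float = HOURLY_RATE_USD) -> float:
    """Rough cost in USD: total instance hours multiplied by the hourly rate."""
    if hours_per_model < 0 or n_models < 0:
        raise ValueError("hours and model count must be non-negative")
    return hours_per_model * n_models * rate


if __name__ == "__main__":
    # Five hyperparameter settings, 6 hours each -> 30 instance hours.
    print("${:.2f}".format(estimate_cost(6, n_models=5)))
```

For five 6-hour runs this prints $17.49 (30 hours × $0.583). The estimate covers only running time. Persistent disk storage is billed even while the instance is stopped.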

Note: Allocating a GPU from Google Cloud’s resource pool is usually not allowed for new accounts. There are 2 things you can try.

- Select a different Region with GPUs available.

- Ask Google to increase your resource quota.
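Before you ask for a quota increase, you can check how much GPU quota a region actually grants. Below is a minimal Python sketch that shells out to the gcloud CLI and reads the `quotas` list in the region description. It assumes the Cloud SDK is installed and authenticated on the machine you run it from. The metric name `NVIDIA_K80_GPUS` is an assumption for the Tesla K80; other GPU types use other metric names. Both helper names are hypothetical.

```python
import json
import subprocess
from typing import Any, Dict, Optional

# Assumption: the regional quota metric for Tesla K80 GPUs is named like this.
K80_METRIC = "NVIDIA_K80_GPUS"


def describe_region(region: str) -> Dict[str, Any]:
    """Fetch a region's details (including quotas) through the gcloud CLI."""
    out = subprocess.check_output(
        ["gcloud", "compute", "regions", "describe", region, "--format=json"],
        universal_newlines=True,
    )
    return json.loads(out)


def gpu_headroom(region_info: Dict[str, Any], metric: str = K80_METRIC) -> Optional[float]:
    """Return quota limit minus usage for `metric`, or None if the region lists no such quota."""
    for quota in region_info.get("quotas", []):
        if quota.get("metric") == metric:
            return quota.get("limit", 0) - quota.get("usage", 0)
    return None
```

For example, if `gpu_headroom(describe_region("us-central1"))` returns 0 or None, try another region or request an increase.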

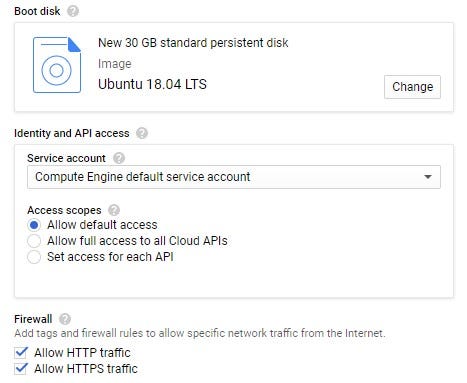

At this point, you should have created your instance successfully. Now go back to the dashboard and click the SSH button. This opens a new browser window with an SSH session to your instance.

The rest is pretty much a copy-paste session. These commands worked for me without any issues, but if you do run into a problem, your Linux terminal skills will come in handy!

Installing CUDA and cuDNN drivers

Update the package lists.

sudo apt-get update

Get rid of any previous NVIDIA installations.

sudo apt-get purge 'nvidia*'

sudo apt-get autoremove

sudo apt-get autoclean

sudo rm -rf /usr/local/cuda*

Install CUDA 10.0.

sudo apt-key adv --fetch-keys http://developer.download.nvidia.com/compute/cuda/repos/ubuntu1804/x86_64/7fa2af80.pub

echo "deb https://developer.download.nvidia.com/compute/cuda/repos/ubuntu1804/x86_64 /" | sudo tee /etc/apt/sources.list.d/cuda.list

sudo apt-get update

sudo apt-get -o Dpkg::Options::="--force-overwrite" install cuda-10-0 cuda-drivers
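Before rebooting, you can optionally confirm that the toolkit landed where the later steps expect it. This minimal Python sketch assumes the CUDA 10.0 packages write a plain-text `version.txt` under `/usr/local/cuda-10.0` containing a line like `CUDA Version 10.0.130`, as CUDA 10.x releases do. The helper names are hypothetical.

```python
import re
from pathlib import Path
from typing import Optional

# Assumed location of the CUDA 10.0 toolkit's version file.
VERSION_FILE = Path("/usr/local/cuda-10.0/version.txt")


def parse_cuda_version(text: str) -> Optional[str]:
    """Extract "10.0.130" from a line like "CUDA Version 10.0.130"."""
    match = re.search(r"CUDA Version (\d+(?:\.\d+)*)", text)
    return match.group(1) if match else None


def installed_cuda_version(path: Path = VERSION_FILE) -> Optional[str]:
    """Return the installed toolkit version, or None if the file is missing."""
    if not path.is_file():
        return None
    return parse_cuda_version(path.read_text())
```

If `installed_cuda_version()` returns None, the `cuda-10-0` package most likely did not install correctly.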

Now restart the Google Cloud Instance.

sudo reboot

Once it's back on, add the CUDA 10.0 binaries and libraries to your PATH and LD_LIBRARY_PATH.

echo 'export PATH=/usr/local/cuda-10.0/bin${PATH:+:${PATH}}' >> ~/.bashrc

echo 'export LD_LIBRARY_PATH=/usr/local/cuda-10.0/lib64${LD_LIBRARY_PATH:+:${LD_LIBRARY_PATH}}' >> ~/.bashrc

source ~/.bashrc

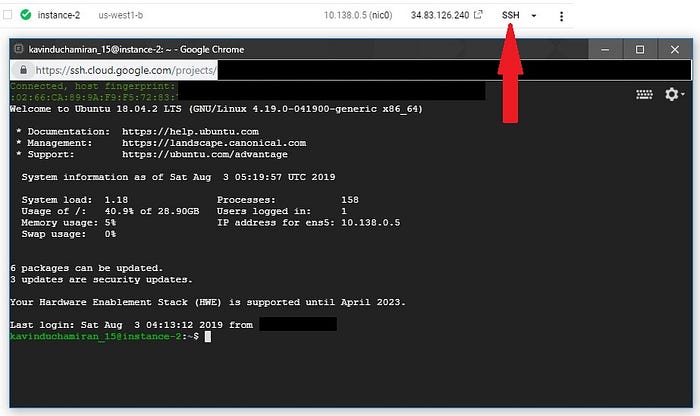

sudo ldconfig

You can check whether your installation was successful by running:

nvidia-smi
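If you prefer a scripted sanity check to eyeballing the output, here is a minimal Python sketch. It checks both the environment variables added to ~/.bashrc and the GPU that nvidia-smi reports. It assumes the CUDA 10.0 paths used above. `missing_cuda_paths`, `parse_gpu_csv` and `query_gpus` are hypothetical helpers; `--query-gpu` and `--format=csv,noheader` are standard nvidia-smi options. Run it from the same shell where you ran `source ~/.bashrc`, since child processes inherit that environment.

```python
import os
import subprocess
from typing import List, Mapping, Tuple

CUDA_HOME = "/usr/local/cuda-10.0"  # assumed install location from the steps above


def missing_cuda_paths(env: Mapping[str, str] = os.environ) -> List[str]:
    """List the CUDA directories that are not yet on PATH / LD_LIBRARY_PATH."""
    expected = {
        "PATH": CUDA_HOME + "/bin",
        "LD_LIBRARY_PATH": CUDA_HOME + "/lib64",
    }
    missing = []
    for var, directory in expected.items():
        entries = env.get(var, "").split(os.pathsep)
        if directory not in entries:
            missing.append("{} not in {}".format(directory, var))
    return missing


def parse_gpu_csv(output: str) -> List[Tuple[str, str]]:
    """Parse `nvidia-smi --query-gpu=name,driver_version --format=csv,noheader` output."""
    gpus = []
    for line in output.strip().splitlines():
        name, driver = [field.strip() for field in line.split(",", 1)]
        gpus.append((name, driver))
    return gpus


def query_gpus() -> List[Tuple[str, str]]:
    """Run nvidia-smi; raises FileNotFoundError if the driver tools are not installed."""
    out = subprocess.check_output(
        ["nvidia-smi", "--query-gpu=name,driver_version", "--format=csv,noheader"],
        universal_newlines=True,
    )
    return parse_gpu_csv(out)
```

On this instance, `query_gpus()` should return a single entry named Tesla K80, and an empty list from `missing_cuda_paths()` means both variables are set.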

Now it’s time to install cuDNN. We are using cuDNN 7.5.0, which is compatible with CUDA 10.0. You need a computer with a web browser for this.

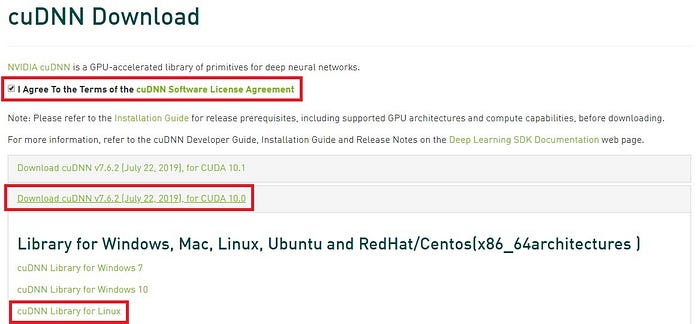

- Go to https://developer.nvidia.com/cudnn

- Sign up and agree to their license agreement.

- Select “cuDNN Library for Linux” under a cuDNN release for CUDA 10.0. The commands below use v7.5.0; if you pick a newer release such as v7.6.2 (July 22, 2019), adjust the file names accordingly.

Sadly, pasting the page link into your SSH terminal will not download the library package, because the download only works for a signed-in user. In your browser, start the download, quickly cancel it, and copy the download link from your download manager. It should look something like this:

https://developer.download.nvidia.com/compute/machine-learning/cudnn/secure/v7.5.0.56/prod/10.0_20190219/cudnn-10.0-linux-x64-v7.5.0.56.tgz?{some random authentication key}

A download link with an authentication key attached to the end.

Now paste it into your SSH terminal. Wrap it in quotes so the shell does not interpret the ? and braces, and save the download as library.tgz:

wget -O library.tgz "https://developer.download.nvidia.com/compute/machine-learning/cudnn/secure/v7.5.0.56/prod/10.0_20190219/cudnn-10.0-linux-x64-v7.5.0.56.tgz?{some random authentication key}"

Replace the URL with the link you copied, including its authentication key.
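If you would rather download from Python than with wget, or want to see which part of the copied link is the file name and which part is the authentication key, here is a minimal standard-library sketch. It assumes the signed link works from any HTTP client, as it does with wget. `filename_from_signed_url` and `download` are hypothetical helper names.

```python
import shutil
import urllib.request
from pathlib import PurePosixPath
from urllib.parse import urlsplit


def filename_from_signed_url(url: str) -> str:
    """Keep the file name from the URL path; the query string holds the auth key."""
    return PurePosixPath(urlsplit(url).path).name


def download(url: str, dest: str = "library.tgz") -> str:
    """Stream the file at `url` (auth key included) to `dest` and return `dest`."""
    with urllib.request.urlopen(url) as response, open(dest, "wb") as out:
        shutil.copyfileobj(response, out)
    return dest
```

For the example link, `filename_from_signed_url` returns `cudnn-10.0-linux-x64-v7.5.0.56.tgz`. Everything after the `?` is the key, which is why the full link must be passed to `download`.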

Now extract the library files and copy them to the CUDA 10.0 installation directory.

tar -xf library.tgz

sudo cp -R cuda/include/* /usr/local/cuda-10.0/include

sudo cp -R cuda/lib64/* /usr/local/cuda-10.0/lib64
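To confirm which cuDNN version ended up in the CUDA directory, you can read the version macros from the header. Here is a minimal Python sketch. It assumes cuDNN 7.x, which defines `CUDNN_MAJOR`, `CUDNN_MINOR` and `CUDNN_PATCHLEVEL` in `cudnn.h`; cuDNN 8 moved them to `cudnn_version.h`. The helper names are hypothetical.

```python
import re
from pathlib import Path
from typing import Optional

# cuDNN 7.x keeps its version macros in cudnn.h (path from the copy step above).
HEADER = Path("/usr/local/cuda-10.0/include/cudnn.h")


def parse_cudnn_version(header_text: str) -> Optional[str]:
    """Read CUDNN_MAJOR/MINOR/PATCHLEVEL from the header and join them as "7.5.0"."""
    parts = []
    for macro in ("CUDNN_MAJOR", "CUDNN_MINOR", "CUDNN_PATCHLEVEL"):
        match = re.search(r"#define\s+{}\s+(\d+)".format(macro), header_text)
        if match is None:
            return None
        parts.append(match.group(1))
    return ".".join(parts)


def installed_cudnn_version(path: Path = HEADER) -> Optional[str]:
    """Return the copied cuDNN version, or None if the header is missing."""
    if not path.is_file():
        return None
    return parse_cudnn_version(path.read_text())
```

If you copied the 7.5.0 files, `installed_cudnn_version()` should return "7.5.0".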

You need to install one more dependency.

sudo apt-get install libcupti-dev

echo 'export LD_LIBRARY_PATH=/usr/local/cuda/extras/CUPTI/lib64:$LD_LIBRARY_PATH' >> ~/.bashrc

Now the driver installation part is done. What’s left is to set up the Python TensorFlow libraries and verify that everything works.

Installing TensorFlow GPU python library

First, install pip3 and other dependencies.

sudo apt-get install python3-numpy python3-dev python3-pip python3-wheel

Uninstall any TensorFlow CPU installation and install the TensorFlow GPU version.

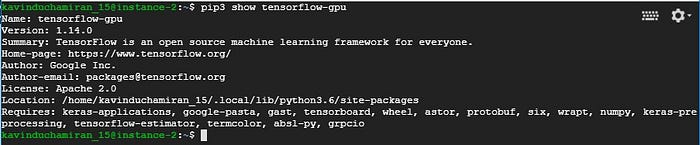

pip3 uninstall tensorflow

pip3 install --user tensorflow-gpu==1.13.1

pip3 show tensorflow-gpu

Your output should be similar to this.

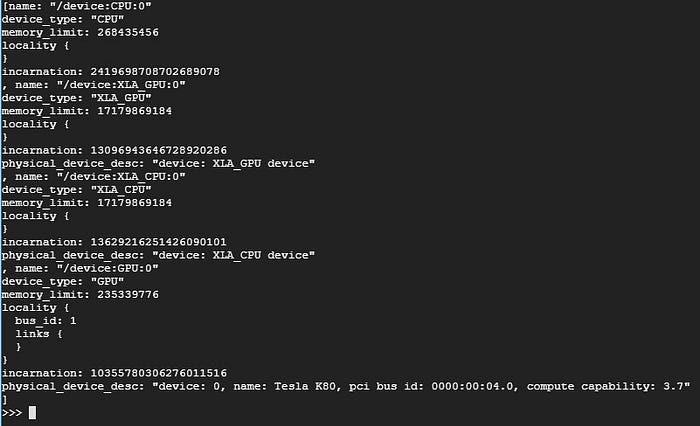
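If you want the same version check inside a script, for example to fail fast before a long training run, here is a minimal Python sketch that wraps `pip3 show`. It assumes pip prints its usual `Key: value` lines. `parse_pip_show` and `installed_version` are hypothetical helpers.

```python
import subprocess
from typing import Dict


def parse_pip_show(output: str) -> Dict[str, str]:
    """Turn `pip3 show` "Key: value" lines into a dict (split at the first colon)."""
    info = {}
    for line in output.splitlines():
        key, sep, value = line.partition(":")
        if sep and not line.startswith(" "):
            info[key.strip()] = value.strip()
    return info


def installed_version(package: str = "tensorflow-gpu") -> str:
    """Return the installed version of `package`, or "" if pip reports none."""
    out = subprocess.check_output(["pip3", "show", package], universal_newlines=True)
    return parse_pip_show(out).get("Version", "")
```

For example, `assert installed_version() == "1.13.1"` at the top of a training script stops it early if the wrong package is installed.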

Start python3 and run the following to check that Python can import TensorFlow and see the GPU attached to your instance.

>>> from tensorflow.python.client import device_lib

>>> print(device_lib.list_local_devices())
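The raw `list_local_devices()` output is verbose. Here is a minimal Python sketch that keeps only the GPUs and splits each one's `physical_device_desc` into fields. It uses the TensorFlow 1.x `device_lib` module shown above. It assumes the description has the comma-separated `key: value` form TensorFlow 1.x usually prints, for example `device: 0, name: Tesla K80, pci bus id: ..., compute capability: 3.7`. `parse_device_desc` and `list_gpus` are hypothetical helpers.

```python
from typing import Dict, List


def parse_device_desc(desc: str) -> Dict[str, str]:
    """Split a description like "device: 0, name: Tesla K80, compute capability: 3.7" into a dict."""
    fields = {}
    for part in desc.split(", "):
        key, _, value = part.partition(": ")
        fields[key.strip()] = value.strip()
    return fields


def list_gpus() -> List[Dict[str, str]]:
    """Return parsed descriptions of the GPUs TensorFlow 1.x can see."""
    # Imported lazily so the parser above works without tensorflow-gpu installed.
    from tensorflow.python.client import device_lib

    return [
        parse_device_desc(d.physical_device_desc)
        for d in device_lib.list_local_devices()
        if d.device_type == "GPU"
    ]
```

An empty list from `list_gpus()` means TensorFlow is falling back to the CPU. For a plain yes/no answer, TensorFlow 1.x also provides `tf.test.is_gpu_available()`.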

Now all that’s left is to run your Python 3 code to start training your model with TensorFlow GPU. Make sure you set the following environment variable in front of python3. You can also use tmux to keep your training running after you close the SSH terminal. Go have a cup of coffee, then come back and resume your terminal session where you left off.

tmux

CUDA_VISIBLE_DEVICES=0 python3 train_my_model.py

# Press CTRL+B, then D, to detach from the tmux session. You can resume it later by typing:

tmux attach
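Instead of prefixing the command, a script can set CUDA_VISIBLE_DEVICES itself. It must do so before TensorFlow is imported, because CUDA reads the variable when it initializes. This is a minimal sketch of the top of a hypothetical `train_my_model.py`. `visible_gpu_ids` is a hypothetical helper, and the training code itself is left out.

```python
import os
from typing import List

# Set before TensorFlow is imported so CUDA only sees GPU 0. setdefault keeps any
# value already given on the command line (e.g. CUDA_VISIBLE_DEVICES=1 python3 ...).
os.environ.setdefault("CUDA_VISIBLE_DEVICES", "0")


def visible_gpu_ids() -> List[str]:
    """GPU indices listed in CUDA_VISIBLE_DEVICES (what CUDA will expose)."""
    value = os.environ.get("CUDA_VISIBLE_DEVICES", "")
    return [gpu.strip() for gpu in value.split(",") if gpu.strip()]


if __name__ == "__main__":
    print("Visible GPUs:", visible_gpu_ids())
    # import tensorflow as tf  # import TensorFlow only after this point
    # ... build and train your model here
```

Using `setdefault` rather than plain assignment means the command-line form from the guide still takes precedence.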

Downloading the trained model

To download your trained model to your local computer (old but not forgotten), first zip the model folder.

sudo apt-get install zip

cd /to/model/directory/one/level/up

zip -r model.zip model_directory

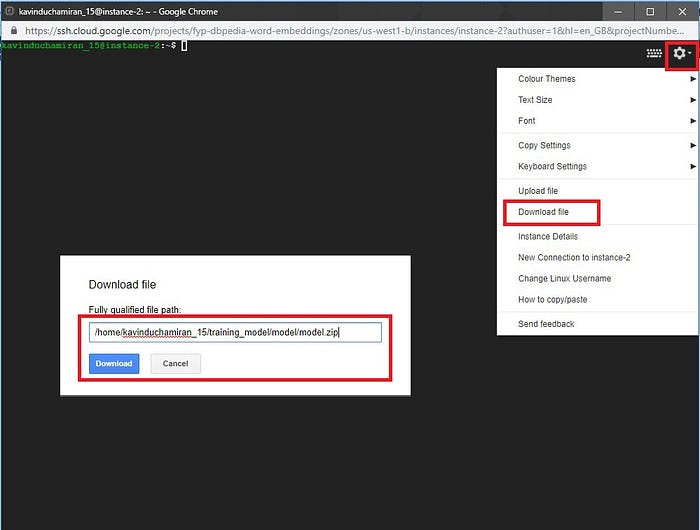

Get the absolute path to your zipped file (for example, by running realpath model.zip). It should look like this.

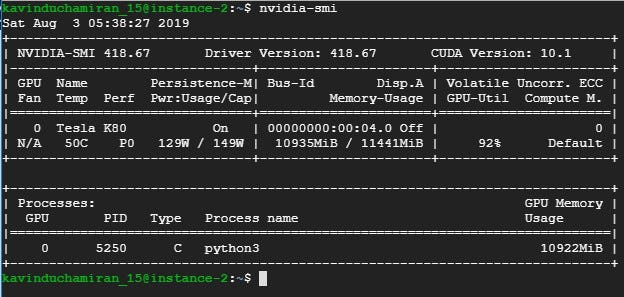

/home/kavinduchamiran_15/training_model/model/model.zip

Click the gear icon at the top right of your SSH window, choose “Download file”, and paste in your file path.
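If you prefer to stay in Python, the zip-and-locate steps above can be sketched with the standard library's `shutil.make_archive`. It produces the same layout as running `zip -r model.zip model_directory` from the parent directory and returns the archive path. `zip_model_dir` is a hypothetical helper, and the archive name `model` is an assumption that mirrors the `model.zip` used above.

```python
import os
import shutil


def zip_model_dir(model_dir: str) -> str:
    """Zip `model_dir` into model.zip next to it and return the archive's absolute path."""
    model_dir = os.path.abspath(model_dir)
    parent = os.path.dirname(model_dir)
    # root_dir/base_dir keep paths inside the zip relative, e.g. model_directory/...
    archive = shutil.make_archive(
        os.path.join(parent, "model"),
        "zip",
        root_dir=parent,
        base_dir=os.path.basename(model_dir),
    )
    return os.path.abspath(archive)
```

Printing `zip_model_dir("model_directory")` gives you the absolute path to paste into the “Download file” dialog.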

You can also use the “Upload file” option to upload your training data if it is too big to push through Git. It is uploaded to your home directory (/home/username).

Conclusion

That’s pretty much it. If you encounter any problems, Google is your friend.

See you in another article. Until then, happy training!